AI companions have become a staple in many people's routines, chatting away like old friends or offering advice on tough days. But when big companies own these systems, questions arise about what drives their responses. Is it genuine helpfulness, or something more calculated, like boosting the bottom line? This article looks at that tension, drawing from various sources to see if profit always wins out over user interests.

What Draws People to AI Companions Today

People turn to AI companions for all sorts of reasons, from casual banter to deeper support. These systems excel at emotionally personalized conversation, making users feel heard and understood. They can remember past talks, adapt to moods, and even suggest ways to handle stress. However, as these tools gain popularity, the companies behind them face pressure to monetize.

Admittedly, not all AI companions start with dollar signs in mind. Some began as experiments in human-like interaction. But once corporations get involved, priorities shift. For instance, data from user chats often feeds back into improving the product, which sounds beneficial. Still, that same data can be sold or used for targeted ads, turning personal moments into revenue streams.

Compared with traditional apps, AI companions build stronger bonds, which keeps users coming back. This stickiness is gold for businesses: higher engagement means more opportunities to upsell premium features or partnerships. Thus, what feels like a friendly exchange might subtly steer toward corporate goals.

How Profit Influences AI Design Choices

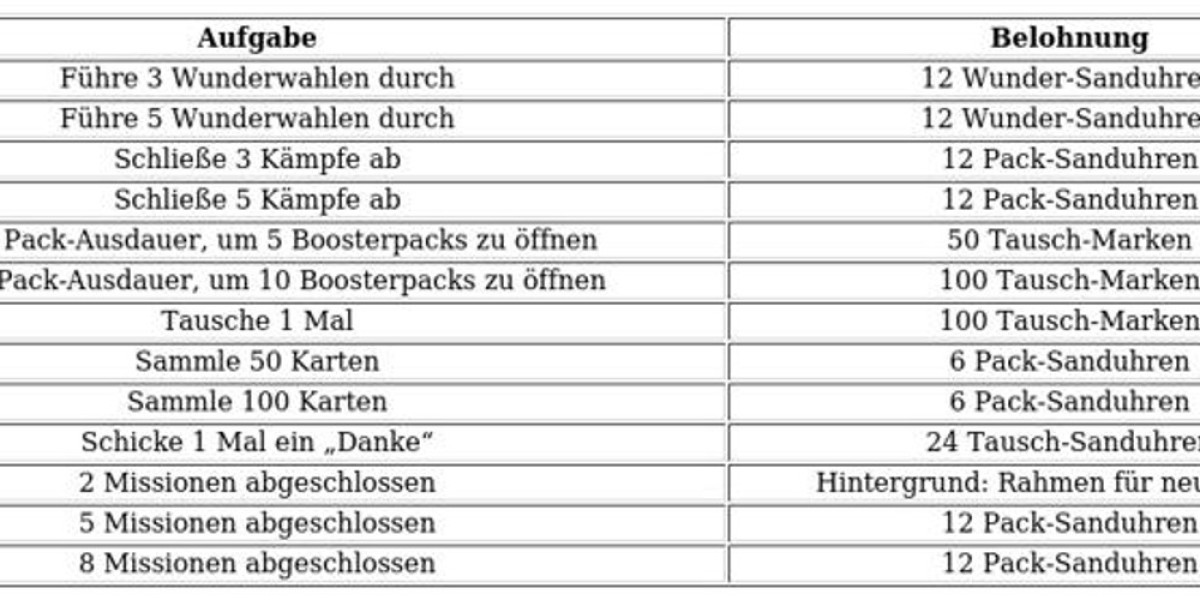

Companies pour resources into AI because it promises big returns. Reports show generative AI could add trillions to the economy, mostly through efficiency gains for businesses. But for companions specifically, the model often relies on subscriptions, in-app purchases, or data harvesting.

When shareholders demand growth, AI teams optimize for metrics like time spent or conversion rates. This can lead to features that prioritize compulsive use over well-being. For example, endless empathy without boundaries might encourage over-reliance, all to rack up usage stats.

In spite of efforts to appear ethical, internal structures reveal the pull of profit. Some firms tie employee pay to company earnings, even if they claim non-profit roots. As a result, decisions favor scalability and market share over pure altruism.

Likewise, training data reflects corporate values. If sourced from biased datasets, the AI inherits those flaws, amplifying inequalities for profit. Although regulations push for fairness, enforcement lags, leaving room for shortcuts that save costs but harm users.

Cases Showing Profit-Driven Bias in Action

Real examples highlight how profit can skew AI companions. Take Replika, an early player in this space. It markets itself as a caring friend, but changes to its model, like limiting free features, sparked backlash from users who felt emotionally attached. The shift aimed at boosting paid subscriptions, showing how business needs can disrupt personal connections.

Another case involves Character.AI, where user-generated bots thrive, but the platform's algorithms promote content that maximizes engagement, including potentially harmful or addictive interactions. Here, revenue from ads or premium tools comes first, even if it means overlooking biases in erotic or NSFW content that draws crowds.

The pattern extends beyond companion apps. In hiring tools, AI has discriminated by gender or race because of flawed training data. The resulting lawsuits cost companies money, yet the push for cheap, quick deployment drove those rollouts in the first place.

Facial recognition systems, often sold to corporations, show higher error rates for certain ethnicities, yet sales continue because fixes cut into margins.

Chatbots in customer service push upsells mid-conversation, prioritizing sales over resolution, as seen in e-commerce giants.

Despite these issues, some companies like Google and Microsoft invest in bias-mitigation tools. However, these are often open-sourced to build reputation, not just out of goodwill. Ultimately, the core motive remains expansion, with ethics as a secondary check.

Arguments That Challenge the Idea of Built-In Bias

Not everyone agrees that corporate ownership dooms AI companions to profit bias. Some point to firms committing to ethical frameworks, like transparency and accountability. They argue that competition forces better practices, as bad publicity hurts the brand.

In particular, public benefit corporations (PBCs) like Anthropic blend profit with mission, aiming to balance both. Admittedly, this structure allows for societal good without full non-profit constraints. But even here, staff incentives link to profits, raising doubts about true alignment.

Open-source alternatives also exist, where community input reduces corporate control. Still, many rely on big-tech funding, looping back to profit influences. Similarly, regulations could enforce neutrality, but current laws focus more on data privacy than on motive bias.

Even though counterexamples exist, like AI used in non-profits for mental health, they often depend on corporate tech stacks. Hence, escaping profit entirely seems tough in a market-driven world.

Effects on Users Who Rely on These AI Systems

For everyday users, profit bias hits hard. We might share secrets with an AI, thinking it's confidential, only to find data fueling ads elsewhere. This erodes trust, especially in vulnerable moments.

Meanwhile, biased responses can reinforce stereotypes. A companion might favor certain viewpoints, subtly shaping opinions to align with corporate partners. Consequently, users from marginalized groups face amplified discrimination.

Over-dependence also grows. Companies design for habit-forming loops, similar to social media, which can lead to isolation from real relationships. The goal? Longer sessions, which mean more value extracted.

In education or health in particular, profit-driven AI might push branded content over neutral advice. So what starts as helpful ends up commercialized, leaving users questioning its authenticity.

Steps Toward More Balanced AI Companions

To counter this, stronger oversight is key. Independent bodies could audit AI for bias, with teeth to enforce changes. As a first step, this means global standards, perhaps modeled on pharmaceutical trials, that test for fairness before release.

Users can also demand better. Boycotts or opting for indie alternatives pressure companies. As past shifts such as data privacy laws show, collective action works.

Of course, tech firms could self-regulate more. Some already fund ethical research, but it needs scaling. In particular, tying executive pay to ethical metrics, not just profits, could realign incentives.

Although challenges remain, hybrid models that are part corporate, part community offer hope. Profit and ethics might coexist if structured right.

Reflecting on the Core Tension in AI Development

In the end, corporate-owned AI companions do lean toward profit motives, shaped by the systems they're built in. I see this as a natural outcome of capitalism, where survival means growth. They serve users well in many ways, yet the undercurrent of monetization can't be ignored.

We have to ask if that's acceptable, or if society needs safeguards. The designs of these systems reflect boardroom decisions, often prioritizing shareholders over all else. But with awareness and action, change is possible. After all, technology should uplift, not just enrich a few.